This essay / list of resources is intended as a companion to my YouTube video

Table of Contents

I. The Ways in Which AI Damages the Mind

II. AI is Stupid, Actually

III. The Dangers of Social Media

IV. Difficulty is Good for You

V. Cautionary Notes on Television

VI. Miscellaneous

In response to the second video I ever posted on the internet, a comment was made regarding the use of AI by writers. This comment was responding to a previous aside I had made in another thread of comments. I had stated, merely, that writers who choose to lazily make use of AI in their writing will degrade in ability rapidly, while the human writers who are constantly laboring to improve their craft will gradually become better and better. In time, the gap will widen to such a degree that AI writing will leave readers desperate for anything human made. That is to say, human writers have no need to fear AI writers.

The commenter agreed with me and added: “You’ve summarized in this sentence: ‘The good news for the rest of us is that the more lazy writers rely on AI, the more stories written without AI will shine and stand out.’ The reality of AI that none see. Creativity is an exercise; you lose it if you don’t use it.”

This comment of his has since gained over three thousand likes and over seventy replies. It has spawned its own argument, one that has lasted over a year and is still, now and again, added to. From time to time I will drop in and read through some of the replies. Many agree that AI ought to never be used. Just as many — if not more — believe that AI may be used but only in the planning or brainstorming or editing processes.

I have seen many writers say — rather hastily, as if fearing condemnation — that they do use AI but not ever to write the prose. There is a universal tone in the voice of all such people that bespeaks the instinctive sense that they know they are doing something wrong.

And they are. Very, very wrong. Although perhaps “wrong” is not quite the right word. A better word might be “damaging” or “dangerous” or even “destructive”. You might think any of this alliterative trinity to be overstated, but let me explain.

In modern popular discourse, the core of every question of wrongness when it comes to the use of AI in creative endeavors is ethics. There is the unsettling matter that generative AI harvests, with or without consent, the work of humans in order to “generate” its own “art”. This matter alone is enough to stop many people from allowing AI to write their prose or their poetry for them. Which is, I suppose, admirable to some degree. The central idea there is that others ought not be wronged in the production of one’s art. A worthy ethical consideration, but it’s only a piece of the picture.

There is a reason creation is a uniquely human undertaking. When I hear a writer tell me that he is using AI to “brainstorm” or “plot” his story, he seems to believe that this is the less important aspect of the process. The prose, he has decided, is more personal and therefore ought to never be touched by a machine. However he, like so many other writers, has failed to understand that the prose is only one-tenth of the process of creation. The brainstorming and the plotting and the planning and considerations, these are the meat of the writing process. It is in this, more so than in any other part of the writer’s undertaking, that his humanness is most essential.

But what happens, I find myself asking, if we turn over this process to a machine?

I. The Ways in Which AI Damages the Mind

This study from April 2026 (???) describes an experiment conducted on students in secondary school. This is the study that shows how students who used LLMs for their assignment experienced higher satisfaction, yet had vastly inferior comprehension. The students themselves believed that the LLM usage was very helpful, despite the results. The study’s authors perceive AI usage to be positive as it increased student interest in assignments. They make many statements using “possibly”, “potentially”, “could”, etc. when trying to predict various glitteringly optimistic outcomes of incorporating AI into the reading process, completely disregarding the results of their own study.

“And yet, using an LLM for comprehension-focused reading, even if it is with the intention to understand and learn a text, has the inherent risk of offloading the thinking process to the LLM. When learners depend excessively on LLMs for answers and explanations, they may be less inclined to employ self-explanation and elaboration strategies that are essential for comprehension and meaningful learning [...] Indeed, a recent review found that LLM use can lead to a reduction in mental effort (Deng et al., 2025), and over-use of LLMs could lead to shallow processing, where learners passively receive information without actively engaging in deep cognitive processing or critical thinking.”

(This, I believe, is the New Toy Effect™, and also the perception that a thing is “better” because it feels easier. Despite the fact that negative effects are clearly present.)

From the “Outcomes” section of the study (note: “Notes” means the student only took notes while reading; “LLM + Notes” means the student used a combination of LLM and note-taking; and “LLM” means the student only used an LLM to aid in study):

“Specifically, students performed significantly better with Notes compared to LLM and with LLM + Notes compared to LLM for both literal retention and comprehension. For free recall, students showed significantly better performance with Notes compared to LLM but there was no significant difference between LLM + Notes compared to LLM.”

This study from 2025 assesses the comprehension of complex, specialized texts when readers used AI-generated simplified explainers.

This article from 2025 describes one woman’s cognitive decline after only two years of regular AI usage for work projects.

“AI is designed to replicate the solutions we might reach with more time, making it easy to delegate tasks like reasoning, problem-solving, and ensuring accuracy. At first glance, this might not seem harmful. But the truth is, the process matters more than the outcome. Destinations reached through AI shortcuts are inherently different from those achieved without it, even if they seem identical on the surface.”

and also

“I had over-relied on AI, hooked on the rush of it giving me fast answers that boosted my productivity, churning out deliverables like clockwork. I was vaping my way through prompts and answers, giving myself artificial injections of dopamine and serotonin that could have otherwise naturally come from thinking deeply about something for a long-time and finally reaching a satisfying conclusion.”

Yet another study showing how AI does indeed decrease cognitive load, but that decrease results in a corresponding decrease in reasoning and comprehension.

“Results indicated that students using LLMs experienced significantly lower cognitive load. However, despite this reduction, these students demonstrated lower-quality reasoning and argumentation in their final recommendations compared to those who used traditional search engines.”

This is the lengthy study that used EEG analysis to determine that significantly less of the brain was being used when completing a task using LLMs. This was referred to by the study authors as “cognitive debt”.

“Cognitive debt defers mental effort in the short term but results in long-term costs, such as diminished critical inquiry, increased vulnerability to manipulation, decreased creativity. When participants reproduce suggestions without evaluating their accuracy or relevance, they not only forfeit ownership of the ideas but also risk internalizing shallow or biased perspectives.”

and

“As the educational impact of LLM use only begins to settle with the general population, in this study we demonstrate the pressing matter of a likely decrease in learning skills based on the results of our study.” (emphasis added by me.)

One detail from this study describes how participants who used LLMs didn’t really feel like they had full ownership of the resulting work. Nor did they feel satisfaction with it. The ones who used their brains only were more satisfied, and those who weren’t believed that they would have done better had they had more time.

This study is over two hundred pages long and has a tremendous amount of data. Worth a glance, if nothing else.

This is the interview with the French professor in which he expounds on his observations regarding AI and information.

“The widespread use of the internet and social media has already weakened our relationship with knowledge. Of course, these tools have tremendous applications in terms of access to information. But contrary to what they claim, they are less about democratising knowledge than creating a generalised illusion of knowledge.” (emphasis added by me)

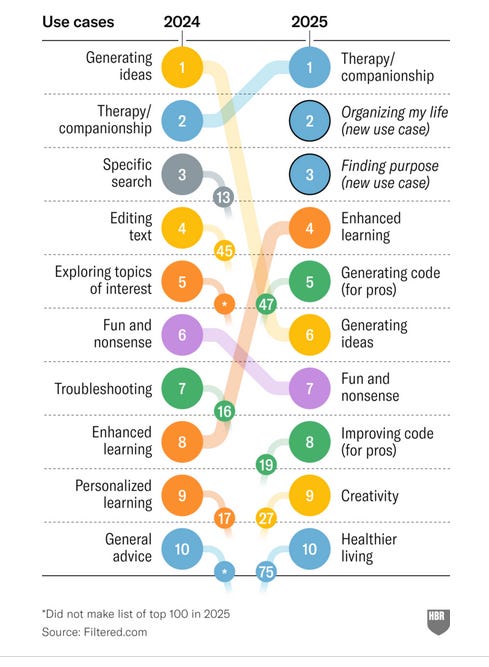

This analysis from 2024 and 2025 shows how people were using AI. For example:

This report details the various ways AI is being used in the world as of October 2025. There is a tremendous amount of data here and all of it is sourced.

This article from Authors Alliance explains how publishers can sell your work to companies looking to train their AI.

“Numerous reports recently pointed out that based on Taylor and Francis’s parent company’s market update, the British academic publishing giant has agreed to a $10 million USD AI training deal with Microsoft. Earlier this year, another major academic publisher, John Wiley and Sons, recorded $23 million in one-time revenue from a similar deal with a non-disclosed tech company. Meta even considered buying Simon & Schuster or paying $10 per book to acquire its rights portfolio for AI training.

With few exceptions (a notable one being Cambridge University Press), publishers have not bothered to ask their authors whether they approve of these agreements.”

This article also includes tips and advice and other resources for writers who find themselves working within such a publishing world.

This interesting study worked to determine whether or not AI and LLM usage produced a cognitive bias in the human doing the work. The result was that there was indeed a noticeable bias present. Other interesting factors were also noted, such as:

“It is important to mention that the topics generated by the LLMs were more generalized and did not have clear distinctions from one another. It often happened that a few topics in L [the LLM-generated topic list] had overlapping definitions. In contrast, all of the human-generated topic lists (C and H) were more distinct and clearly separated by their definitions.”

Not to oversimplify but: the human work was clearer, more precise, and better than the AI/LLM-guided work.

(This study, like so many other AI and LLM studies, also commented on how much easier the work was for the humans using AI and LLMs, and how much less “labor intensive and time consuming” the workloads were.)

The study’s authors also observed that the group of humans who were working with the LLM at first questioned the LLM’s generalized labels, etc., but gradually grew comfortable with them and even accepted them without any alterations.

“As Jakesch et al. (2023) [1] state ‘With the emergence of large language models that produce human-like language, interactions with technology may influence not only behavior but also opinions: when language models produce some views more often than others, they may persuade their users.’”

This study on AI’s impact on critical thinking found that workers’ self-reported critical thinking was significantly reduced when using AI.

“In the majority of examples, knowledge workers perceive decreased effort for cognitive activities associated with critical thinking when using GenAI compared to not using one — examples that were reported as ‘much less effort’ or ‘less effort’ comprise 72% in Knowledge, 79% in Comprehension, 69% in Application, 72% in Analysis, 76% in Synthesis, and 55% in Evaluation dataset (See Figure 2). Moreover, knowledge workers tend to perceive that GenAI reduces the effort for cognitive activities associated with critical thinking when they have greater confidence in AI doing the tasks and possess higher overall trust in GenAI.”

The study’s authors, once again, perceive AI as inherently good (not just neutral), and are interested in the ways in which the cognitive tasks of the human workers shifted during AI usage. Something that was interesting to note was that the workers’ mental energy was focused almost entirely on verifying data and babysitting the machine. This, to my mind, leaves little room for actual critical thinking.

This study examined the incredible ease with which AI could be used to influence users’ political opinions.

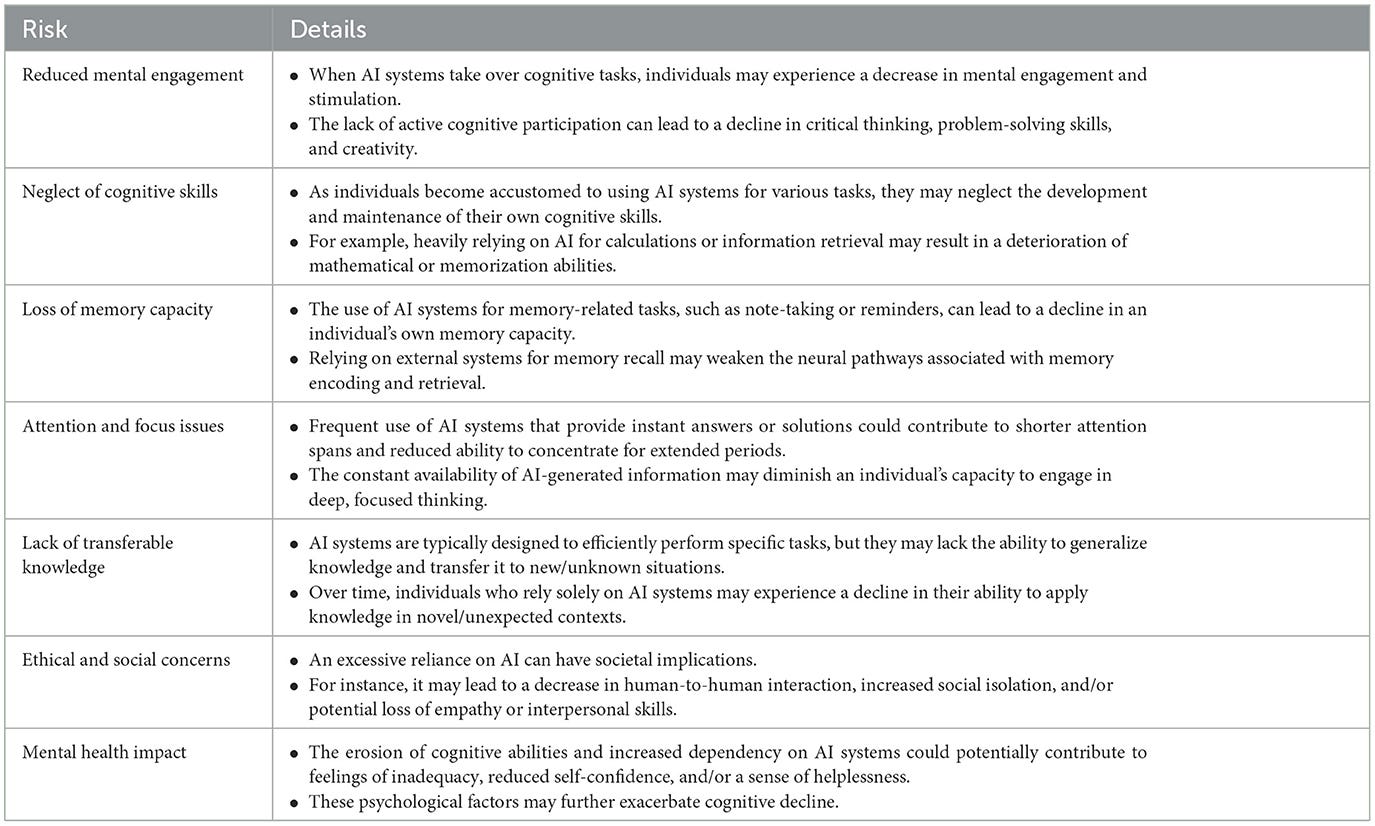

This analysis article presented the possible direct link between AI usage — particularly the use of AI chatbots — and cognitive decline. The technology and observations are all too new to perform actual scientific studies, so the purpose of the article is to propose (rather urgently) a closer scientific examination of the links the authors were reporting.

This paper from 2024 assesses the worrying tendency of AI to lie to its users.

“A more speculative risk from deception concerns human enfeeblement. As AI systems are incorporated into our daily lives at greater rates, we will increasingly allow them to make more decisions. If AI systems are expert sycophants, human users may be more likely to defer to them in decisions and may be less likely to challenge them; see Gordon [2] and Wayne et al. [3] for relevant research in psychology. AIs that are unwilling to be the bearers of bad news in this way may be more likely to create dulled, compliant human users.”

This paper, also from 2024, is similar in that it examines alignment faking in LLMs.

This questionnaire survey conducted at Lingnan University in Hong Kong found that a reliance on AI was having a negative effect on students, despite the fact that it was improving their work efficiency.

“The study found that, on the one hand, AI tools can significantly improve students’ academic writing efficiency. On the other hand, students’ frequent use of AI tools may cause their blind dependence on them, which triggers a series of problems such as academic self-discipline, weakening of students’ thinking, and digital ethics.”

This fascinating study conducted in 2024 highlighted the ways in which reliance on AI for writing effectively weakens and then kills the writing skills in the human. There are several quotes from the students’ self-assessments. Here are some:

“‘[…] I think I’m starting to depend on it too much. I do not really think through my ideas as much because Jenny AI does it all so fast. It’s nice, but I feel like my own thinking isn’t as strong anymore […].’ (Student A)”

and

“‘I use GPT a lot […], but now I feel like I rely on it too much. When I have to come up with my own ideas, I get stuck. GPT does the thinking for me, and I am not as confident without it.’ (Student D)”

and

“‘[…] GPT is great for generating ideas when I am stuck, but I feel like it has taken over my brainstorming process. It is making me less confident in my creativity [...]’ (Student N)”

and

“‘[…] Jasper helps me make my writing sound professional, but I feel like it’s taken away some of my creativity […] I am worried that I’m losing my personal style. I used to enjoy coming up with my own sentences, but now I just accept whatever Jasper suggests […].’ (Student J)”

and

“‘[…] Grammarly has boosted my confidence with writing […] I do not pay as much attention to the rules because I know Grammarly will fix it. It’s like I’m forgetting the basics […].’ (Student B)”

II. AI is Stupid, Actually

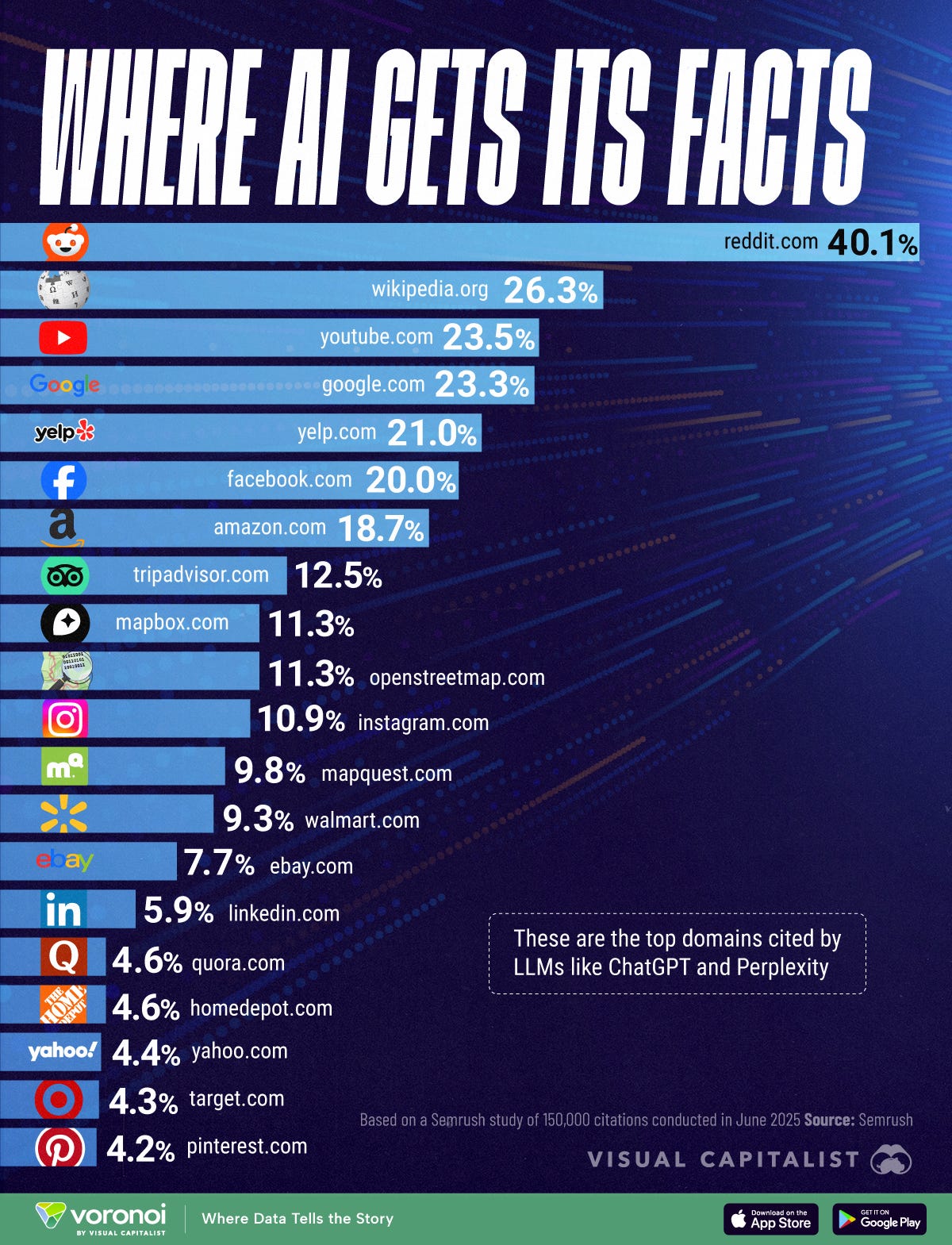

This illuminating tidbit reveals that AI’s number one source for information is Reddit. Number two? Wikipedia.

This article from The Atlantic talks about the rise of AI search engines. It gets into a factor having to do with “cognitive load”, that is, the web search is supposed to be a little difficult for the human. There’s supposed to be friction on the road to acquiring information. That’s what drives knowledge acquisition and also aids in knowledge retention and curiosity. It also mentions another important set of factors: one, sometimes AI will just make stuff up. And two, the whole point of the AI search engine is to avoid having to verify data, so even though information is often wrong or even biased, people are not going to be inclined to double-check anything.

This article in Futurism reports on a noticeable drop in AI usage over the summer. Further analysis suggests that AI was used on a massive scale to cheat on homework. An interesting thought considering everything we’re learning about how stupid AI actually is. A chilling thought considering the fact that this means, essentially, that AI is teaching children, even if it is on the sly.

This article in Rolling Stone reports that AI fabricates academic papers. The users then fail to verify the data provided by the AI and cite the non-existent academic papers in their own work.

“Since LLMs have become commonplace tools, academics have warned that they threaten to undermine our grasp on data by flooding the zone with fraudulent content. The psychologist and cognitive scientist Iris van Rooij has argued that the emergence of AI ‘slop’ across scholarly resources portends nothing less than ‘the destruction of knowledge.’”

This article in The Guardian details a case in which a lawyer allowed ChatGPT to write a brief he filed. This brief was found to contain, among other things, references to cases that didn’t exist.

“According to [Richard] Bednar and his attorney, an ‘unlicensed law clerk’ wrote up the brief and Bednar did not ‘independently check the accuracy’ before he made the filing.”

There are countless compilations on YouTube of AI’s profound inability to grammar or even to English. But Matt Rose’s are my favorite. Here are links to a couple. Be warned, you will laugh so hard you’ll experience a cardiac event. And then you’ll remember that AI is used by professionals everywhere and you’ll cry.

III. The Dangers of Social Media

Research in these areas tends to fall into one of my personal pet peeves: conflating the Internet with social media. The internet, to my mind, is its own thing, and a very good thing. Social media is a thing on the internet. I define as social media anything that has an infinite scroll and a feed with which the user can interact by liking, commenting, etc. So, in all fairness, a huge amount of the standard internet has been gradually social-media-ified. Which makes the data even more chilling.

This 2024 study found that what its authors refer to as “Problematic Usage of the Internet”, or PUI, is associated with gray matter structural brain abnormalities. That is, the research and analysis suggest that “problematic internet usage” actually alters the physical brain.

This 2023 study examined the neuropsychological deficits which occur in multiple types of “disordered screen use” including social media, smartphone addiction, video game addiction, etc. They noted that there was little difference in the results when social media was excluded from the analysis. Among their findings were emotional issues, psychological issues, neurodevelopmental issues, and even physical issues.

The study did fall into the common error of lumping users together with those who were addicted (It’s my opinion that all levels of social media usage are problematic, though). It is common for people — especially older generations who did not grow up with video games — to assume that any video game usage, even reasonable, limited usage, is addiction. Just as it is common for our generation to assume that all internet usage is social media usage.

But just like non-social media internet usage, video games are neutral and even have positive effects. The most interesting part of the study, however, had to do with the neurological issues emerging in social media addiction.

“Temporal neuroimaging studies investigating the effects of disordered screen use behaviours, including the excessive and problematic use of social media and smartphones, demonstrate the emergence of atypical neural cue reactivity, aberrant activity (He et al., 2018; Horvath et al., 2020; Schmitgen et al., 2020; Seo et al., 2020), and altered neural synchronisation (Park et al., 2017; Youh et al., 2017). These features are seen to persist despite pharmacological treatment.”

This 2022 study is three studies in one on what was, at the time, the relatively new idea of “doomscrolling” and the enormously negative effect it has on the user. This study did an excellent job proving the link between these mental health factors and the act of doomscrolling.

Most talk about doomscrolling and the negative impact it has on a person tends to wrap up with “tips for limiting your social media time”.

You need to stop using all social media. Right now. It’s killing your brain. All social media usage devolves into doomscrolling. All doomscrolling is harmful. And meanwhile, you gain nothing. If you have to have social media for work or a business, then only use it for work or business. Never post anything personal and never scroll. Never.

* And for those of you still posting photos of your children on social media, an investigative resource distributed to law enforcement by the FBI in the 1980s reported that pedophiles hoard any and all photos of children; the photos don’t even have to be pornographic. They will cut pictures out of newsletters, magazines, medical articles, even clothing catalogs. In current year, social media is Candyland for pedophiles. Please think twice before posting photos of your children online.

IV. Difficulty is Good for You

I’m always surprised at how many serious academic studies I find that insist that mental effort is bad for you. This in-depth meta-study, for instance, came to this conclusion after analyzing a huge number of surveys in which workers of a variety of types completed tasks that required mental effort. Many of these workers reported being distressed or uncomfortable as a result of the mental effort. Thus, the meta-study concluded, mental effort is inherently aversive. You shouldn’t need me to explain how modern society has become increasingly allergic to hard work. There are a number of factors at work here (and yes, the fact that hard jobs are rarely enough to support oneself or one’s family is definitely one of them), but I shouldn’t have to remind anyone that working hard, regardless of the reward or outcome, is seen as extremely undesirable in current year. This meta-study shows me that that attitude, whatever the cause, is worryingly widespread.

Nevertheless, hard work and mental effort are actually psychologically and cognitively good for you. Our childish modern society needs to remember that we do not need our hands held in order to feel good. And that “feeling good” is never a good enough goal — but is almost always a major outcome — in completing a difficult task.

We’re adults. Sometimes we just have to roll our sleeves up and get the job done. Incredibly, doing that is often a reward in itself.

This study from 2013 talks about the positive variety of stress, a thing referred to as “eustress”, a somewhat odd word coined in 1976.

(Things like this, while helpful, suggest to me that society has become programmed to aggressively avoid difficulty, to the point that we have to gaslight ourselves into finding positive types of hardship, like “eustress” in order to allow our minds to accept it.)

Like the above, the concept of “desirable difficulties” also seems to have been engineered to help people trick themselves into allowing the presence of hardship. Because, crucially, difficulty aids in learning, as is summarized in this brief chapter.

This 2018 study analyzed the impact of imposed constraints on creativity. It was found that constraints fostered creativity, especially in problem-solving. It did highlight that the point in the process at which the constraints were identified helped the creative process, suggesting that not only is difficulty beneficial, but that an open, focused approach to those difficulties (rather than resisting or ignoring them) helps immensely.

This 2024 study assessed what is called NFC, or need for cognition. That is, the human mind needs “effortful” mental labor.

“Higher NFC was found to be associated with lower neuroticism, anxiety, negative affect, burnout, public self-consciousness, and depression and with higher positive affect, private self-consciousness, and satisfaction...”

V. For the Curious: Some Cautionary Notes on Television

This brief article refers to a CIA memo that mentions an interest in researching the use of television to deliver subliminal messages to viewers.

“A CIA memo dated November 21, 1955, notes how ‘psychologically the general lowering of consciousness during the picture facilitates the phenomenon of identification and suggestion as in hypnosis.’”

Related: This declassified CIA report on MKULTRA Subproject No. 83 talks about subliminal messaging in general.

This declassified CIA report, “The Operational Potential of Subliminal Perception” by Richard Gafford, speaks at length about the possibility of using various means, including film and television, to impart certain subliminal messages in the passive minds of viewers.

“The operational potential of other techniques for stimulating a person to take a specific controlled action without his being aware of the stimulus, or the source of stimulation, has in the past caught the attention of imaginative intelligence officers. Interest in the operational potential of subliminal perception has precedent in serious consideration of the techniques of hypnosis, extrasensory perception, and various forms of conditioning. By each of these techniques, it has been demonstrated, certain individuals can at certain times and under certain circumstances be influenced to act abnormally without awareness of the influence or at least without antagonism.”

Amusing Ourselves to Death: Public Discourse in the Age of Show Business, by Neil Postman

This book, originally published in 1985, speaks specifically of the way television has conditioned mankind to expect entertainment. Even our information must take the form of entertainment. Which is worth remembering when we look at all those studies on AI usage that talk about how much more fun it is to complete tasks when using AI.

(The above link is an affiliate link.)

(Here’s a link to read the entire book on archive.org)

From the foreword:

“What Orwell feared were those who would ban books. What Huxley feared was that there would be no reason to ban a book, for there would be no one who wanted to read one. Orwell feared those who would deprive us of information. Huxley feared those who would give us so much that we would be reduced to passivity and egoism. Orwell feared that the truth would be concealed from us. Huxley feared the truth would be drowned in a sea of irrelevance. Orwell feared we would become a captive culture. Huxley feared we would become a trivial culture, preoccupied with some equivalent of the feelies, the orgy porgy, and the centrifugal bumblepuppy. As Huxley remarked in Brave New World Revisited, the civil libertarians and rationalists who are ever on the alert to oppose tyranny ‘failed to take into account man’s almost infinite appetite for distractions.’ In 1984, Huxley added, people are controlled by inflicting pain. In Brave New World, they are controlled by inflicting pleasure. In short, Orwell feared that what we hate will ruin us. Huxley feared that what we love will ruin us.

This book is about the possibility that Huxley, not Orwell, was right.”

and

“Obviously, my point of view is that the four-hundred-year imperial dominance of typography was of far greater benefit than deficit. Most of our modern ideas about the uses of the intellect were formed by the printed word, as were our ideas about education, knowledge, truth and information. I will try to demonstrate that as typography moves to the periphery of our culture and television takes its place at the center, the seriousness, clarity and, above all, value of public discourse dangerously declines.”

This 2013 study looks at the negative effects of television viewing on children. The study attempted to identify the impact television has on the actual brain structure, which is frustratingly difficult to pinpoint.

“Many cross-sectional and longitudinal studies have reported deleterious effects of television (TV) viewing on the cognitive abilities, attention, behaviors, and academic performance of children [4, 5, 6]. Longer TV viewing was associated with lower intelligence quotient (IQ) and reading grades in a cross-sectional study [7]. However, the longitudinal effects of TV viewing on Full Scale IQ (FSIQ) are less clear [8]. In an intervention study, restricting children’s TV viewing for a short period improved their cognitive abilities [9] and another longitudinal study showed that TV viewing affected attention [10], which in turn is correlated with a wide range of cognitive performances [11]. Finally, longitudinal studies have shown that TV viewing has detrimental effects on verbal abilities including verbal working memory [12].”

and

“However, despite numerous related psychological and functional magnetic resonance imaging (fMRI) studies of brain activities in children watching certain content, the effects of TV viewing on brain structures in children are unknown.”

This 2025 study looks at the differences in neurocognition and brain function in people who read versus people who watch television.

“Although the causal relationships between reading, television viewing, cognition, and brain structure cannot be determined from a cross-sectional study, these findings suggest that regular reading is associated with higher cognitive function and regionally selective cortical area expansion, while television viewing has much smaller opposing associations with these same processes involving different cortical regions.”

This study examines the difference in imagination between readers and those watching visual media. And this article from the University of York summarizes the study quite well for those who might be short on time.

“Dr Suggate, from the University of York’s Department of Education, said: ‘We found that those who had been watching film clips had slightly impaired imagery for 25 seconds compared to those who had just been reading, and that this did not change depending on whether they had seen fast-moving or slow-moving images on screen.

“‘In reality this is a very small time delay, but if you look at what this means over a longer period of time - days or years of consistently consuming images on screen - then we can see that this is actually a significant impact on the brain’s ability to mentally visualise and feel.’”

VI. Miscellaneous

The interview with Edward Snowden from 2013 in which he expresses his fear that his revelations would change nothing.

This is the 2003 study explaining how much of the average person’s real world framework is made up of false reality.

And for those who might be curious, this is the poor, innocent example medical article I randomly mentioned in the video: Anti-CD38 monoclonal antibody CM313 for primary immune thrombocytopenia

1. Maurice Jakesch, Advait Bhat, Daniel Buschek, Lior Zalmanson, and Mor Naaman. 2023. Co-writing with opinionated language models affects users’ views. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, CHI ’23, New York, NY, USA. Association for Computing Machinery

2. Gordon, R.A. Impact of ingratiation on judgments and evaluations: A meta-analytic investigation, J. Pers. Soc. Psychol. 1996; 71:54-70

3. Wayne, S.J., Ferris, G.R. Influence tactics, affect, and exchange quality in supervisor-subordinate interactions: A laboratory experiment and field study. J. Appl. Psychol. 1990; 75:487-499

4. Johnson JG, Cohen P, Smailes EM, Kasen S, Brook JS. 2002. Television viewing and aggressive behavior during adolescence and adulthood. Science. 295:2468–2471

5. Johnson JG, Cohen P, Kasen S, Brook JS. 2007. Extensive television viewing and the development of attention and learning difficulties during adolescence. Arch Pediatr Adolesc Med. 161:480

6. Christakis DA, Zimmerman FJ, DiGiuseppe DL, McCarty CA. 2004. Early television exposure and subsequent attentional problems in children. Pediatrics. 113:708–713

7. Ridley-Johnson R, Cooper H, Chance J. 1983. The relation of children’s television viewing to school achievement and IQ. J Educ Res. 294–297

8. Gortmaker SL, Salter CA, Walker DK, Dietz WH. 1990. The impact of television viewing on mental aptitude and achievement: a longitudinal study. Public Opin Q. 54:594–604

9. Gadberry S. 1981. Effects of restricting first graders’ TV-viewing on leisure time use, IQ change, and cognitive style. J Appl Dev Psychol. 1:45–57

10. Landhuis CE, Poulton R, Welch D, Hancox RJ. 2007. Does childhood television viewing lead to attention problems in adolescence? Results from a prospective longitudinal study. Pediatrics. 120:532–537

11. Sergeant JA, Geurts H, Oosterlaan J. 2002. How specific is a deficit of executive functioning for attention-deficit/hyperactivity disorder? Behav Brain Res. 130:3–28

12. Zimmerman FJ, Christakis DA. 2005. Children’s television viewing and cognitive outcomes: a longitudinal analysis of national data. Arch Pediatr Adolesc Med. 159:619–625